Peer Review and Research Funding: Innovating Selections

By Nicolas Sacchetti

Bert van den Berg’s extensive experience in the Canadian research system spans several decades. It includes work as a researcher at the National Research Council, as an engineer at a high-tech company, and at the Natural Sciences and Engineering Research Council of Canada. His recent work has focused on various aspects of research and innovation policy, particularly the peer review process.

His research titled « Moving Beyond Peer Review with Performance Measures » was presented at the 4POINT0 Conference on Policies, Processes, and Practices for Performance of Innovation Ecosystems (P4IE 2022), held from May 9 to 11, 2022.

Mr. Van den Berg’s initial involvement in developing open-source tools for research-scored competitions, particularly in support of a hackathon, led him to an analytical exploration of the data generated in these processes. This exploration culminated in the insights he shared in his presentation at the conference.

Typical Research Funding Competition Selection Process

- Applications are scored by experts using criteria

- Scores are averaged

- Applications are ranked

- Budgets are allocated top-down

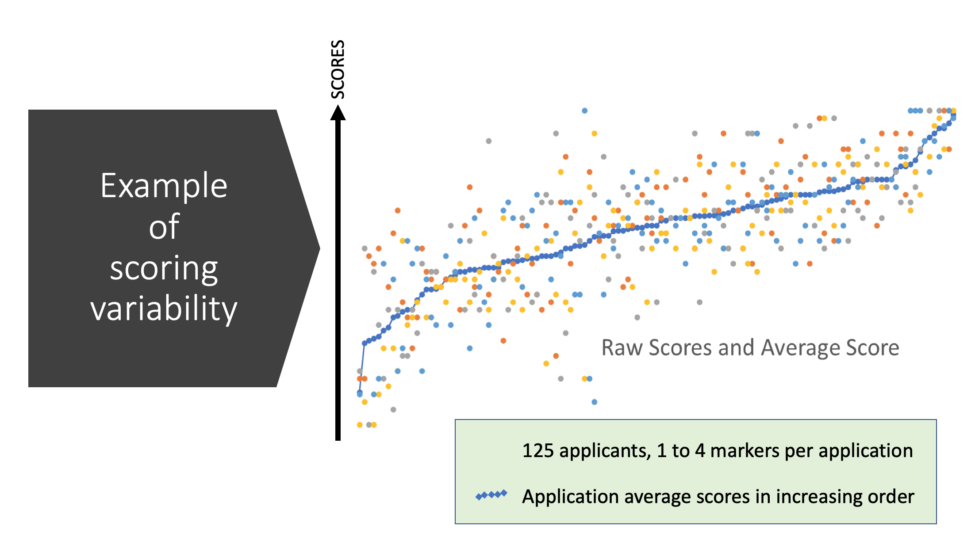

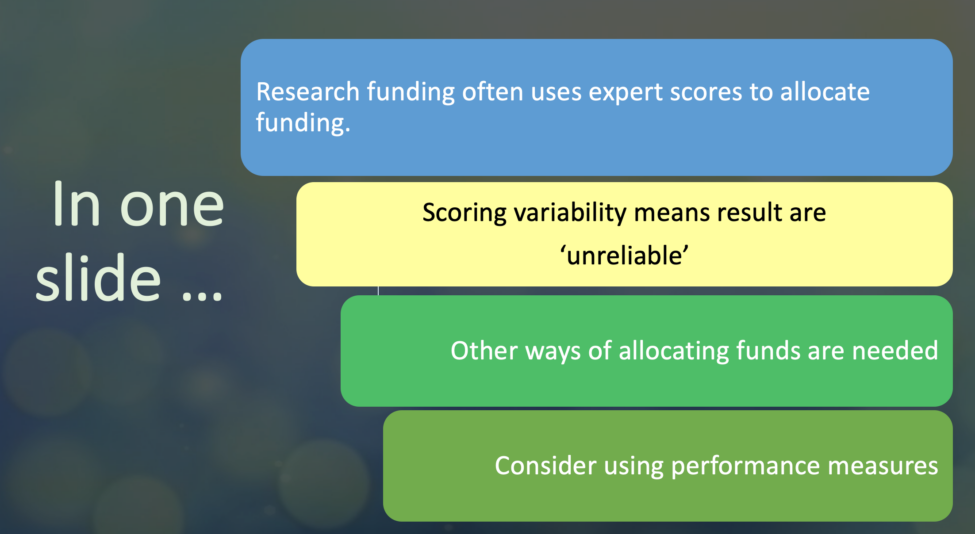
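The four steps above can be sketched in a few lines of Python. This is a minimal illustration, not any agency's actual system. The function name `select_and_fund`, the input layout, and the rule of stopping at the first request that no longer fits the remaining budget are all assumptions. Real programs may instead skip that application or fund it partially.

```python
from statistics import mean


def select_and_fund(scores, requests, budget):
    """Average expert scores, rank applications, and allocate funds top-down.

    scores:   dict mapping application id -> list of expert scores
    requests: dict mapping application id -> requested amount
    budget:   total funds available for the competition
    Returns a dict of funded application id -> amount awarded, in rank order.
    """
    # Steps 1-2: each application's expert scores are averaged.
    averages = {app: mean(s) for app, s in scores.items()}
    # Step 3: rank applications from highest to lowest average score.
    ranked = sorted(averages, key=averages.get, reverse=True)
    # Step 4 (assumed rule): fund down the list until a request no longer fits.
    funded, remaining = {}, budget
    for app in ranked:
        if requests[app] > remaining:
            break
        funded[app] = requests[app]
        remaining -= requests[app]
    return funded


scores = {"A": [4, 5, 4], "B": [3, 3, 4], "C": [5, 4, 5]}
requests = {"A": 100_000, "B": 80_000, "C": 120_000}
print(select_and_fund(scores, requests, budget=250_000))  # → {'C': 120000, 'A': 100000}
```

In this hypothetical example, application B misses out. The reason is not a poor score but that only 30,000 remains once the two higher-ranked requests are funded. This shows how small differences in averaged scores decide who is funded.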

The author notes that this process is used to allocate billions in funding annually. However, he identifies significant issues within this framework. To illustrate, Mr. Van den Berg cites the Conference on Neural Information Processing Systems (NIPS 2014), where approximately a thousand abstracts were submitted for review. In an experiment, NIPS assigned the same 60 applications to two separate committees, and the two committees agreed on their recommendations only 43% of the time.
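The source does not say exactly how the agreement figure was computed. To show what such a figure measures, the sketch below assumes simple percent agreement: the share of applications for which both committees made the same recommendation. The function name and the accept/reject labels are hypothetical, and the sample data is invented for illustration.

```python
def percent_agreement(committee_a, committee_b):
    """Share of applications on which two committees made the same recommendation.

    committee_a, committee_b: dicts mapping application id -> recommendation
    (for example "accept" or "reject"); both must cover the same applications.
    """
    if not committee_a or committee_a.keys() != committee_b.keys():
        raise ValueError("both committees must review the same, non-empty set of applications")
    same = sum(committee_a[app] == committee_b[app] for app in committee_a)
    return same / len(committee_a)


committee_a = {"p1": "accept", "p2": "reject", "p3": "accept", "p4": "reject"}
committee_b = {"p1": "accept", "p2": "accept", "p3": "reject", "p4": "reject"}
print(percent_agreement(committee_a, committee_b))  # → 0.5
```

Simple percent agreement does not correct for the agreement two committees would reach by chance. Statistics such as Cohen's kappa make that correction.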

This level of inconsistency poses a challenge for those who value peer-scored decision-making processes. Mr. Van den Berg reinforces the point by citing a study by Guthrie et al., conducted for the Canadian Institutes of Health Research, which suggests a broader consensus in the research community: « peer review is unreliable ».

Strategies to Address Scoring Variability

The paper discusses various approaches to reduce scoring variability.

Language Ladders to Enhance Evaluation Quality: Utilizing structured guidelines, known as language ladders, can standardize the evaluation process. This approach aims to provide a consistent framework for assessment, potentially improving the quality of evaluations.
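As an illustration only, a language ladder can be represented as a small table that pairs each score with a written descriptor. Reviewers then pick the rung that best matches an application rather than inventing a number. This sketch assumes that interpretation. The five rungs, their labels and wording, and the helper `score_for` are hypothetical and are not taken from any funding agency.

```python
# Hypothetical ladder: each rung = (score, short label, anchoring description).
LANGUAGE_LADDER = [
    (5, "exceptional", "Fully meets every criterion; no weaknesses identified"),
    (4, "strong", "Meets every criterion; only minor weaknesses"),
    (3, "adequate", "Meets most criteria; some notable weaknesses"),
    (2, "weak", "Fails to meet several criteria"),
    (1, "poor", "Fails to meet most criteria"),
]


def score_for(label):
    """Convert the rung a reviewer selected into its numeric score."""
    wanted = label.strip().lower()
    for score, rung_label, _description in LANGUAGE_LADDER:
        if rung_label == wanted:
            return score
    raise ValueError(f"{label!r} is not a rung on the ladder")


print(score_for("Strong"))  # → 4
```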

Training for Reviewers: Recognizing that not all experts reviewing applications may be adept in peer review, training is suggested. This training is intended to equip reviewers with the necessary skills and knowledge to conduct more accurate and equitable evaluations.

Calibration of Scoring: This strategy involves identifying and addressing biases in the scoring process. Adjustments may be made to counteract consistent biases identified in the competition or in individual assessments. Although this can reduce variability in scoring, it is acknowledged that complete elimination of bias may not be achievable.
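The source does not specify a calibration method. One simple form, assumed here for illustration, estimates each reviewer's leniency or severity as the gap between their average score and the competition-wide average, then subtracts that gap from their scores. The function name `calibrate` and the tuple layout are hypothetical.

```python
from collections import defaultdict
from statistics import mean


def calibrate(reviews):
    """Remove each reviewer's average leniency or severity from their scores.

    reviews: list of (reviewer, application, score) tuples.
    Returns a list of (reviewer, application, adjusted_score) tuples.
    """
    overall = mean(score for _, _, score in reviews)
    by_reviewer = defaultdict(list)
    for reviewer, _, score in reviews:
        by_reviewer[reviewer].append(score)
    # A reviewer's offset is how far their average sits from the overall average.
    offset = {r: mean(s) - overall for r, s in by_reviewer.items()}
    return [(r, app, score - offset[r]) for r, app, score in reviews]


reviews = [("R1", "A", 5), ("R1", "B", 4), ("R2", "A", 3), ("R2", "B", 2)]
print(calibrate(reviews))
# → [('R1', 'A', 4.0), ('R1', 'B', 3.0), ('R2', 'A', 4.0), ('R2', 'B', 3.0)]
```

This adjustment assumes reviewers see applications of comparable quality. A reviewer who happened to receive stronger applications would be wrongly marked down. This is one reason complete elimination of bias may not be achievable.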

Inclusion of Quantitative Scoring Elements: The proposal here includes adding measurable criteria to the scoring system. This could involve evaluating factors such as the allocation of funds toward training, revenue generated from parking services, or metrics related to previous impact factors. The intention is to introduce quantifiable elements that could contribute to a more objective evaluation process.
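One way to add measurable criteria is a weighted blend of the peer score with quantitative metrics. This is a sketch under stated assumptions: every input is taken to be rescaled to a 0-1 range beforehand. The metric names and the 70/30 split between peer review and metrics (`peer_weight`) are hypothetical choices, not values from the presentation.

```python
def composite_score(peer_score, metrics, weights, peer_weight=0.7):
    """Blend a peer-review score with weighted quantitative metrics.

    All inputs are assumed to be rescaled to the 0-1 range beforehand.
    peer_weight is the share of the final score given to peer review.
    """
    total_weight = sum(weights.values())
    # Weighted average of the quantitative metrics (weights need not sum to 1).
    quantitative = sum(w * metrics[name] for name, w in weights.items()) / total_weight
    return peer_weight * peer_score + (1 - peer_weight) * quantitative


score = composite_score(
    peer_score=0.8,
    metrics={"training_share": 0.5, "publications_5y": 1.0},
    weights={"training_share": 1, "publications_5y": 1},
)
print(round(score, 3))  # → 0.785
```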

Applying Metrics that Matter

According to Bert van den Berg, peer review is able to filter out the lowest-quality applications. Yet, he underscores the importance of a method to further distinguish among the submissions that remain. To this end, the author recommends integrating specific performance measures already included in the applications.

He proposes focusing on objective metrics such as the percentage of funds dedicated to training, the number of individuals planned to be engaged within a year, or perhaps the volume of publications produced in the past five years. In essence, any metric recognized as a credible indicator of performance is viable.
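The two-step idea above, with peer review as a filter and then an objective measure to separate what remains, can be sketched as follows. The threshold value, the field names, and the choice of the percentage of funds dedicated to training as the single ordering metric are hypothetical.

```python
def two_stage_rank(applications, peer_threshold, metric):
    """Stage 1: keep applications whose average peer score meets the threshold.
    Stage 2: order the survivors by one performance measure, highest first."""
    survivors = [a for a in applications if a["peer_score"] >= peer_threshold]
    return sorted(survivors, key=lambda a: a[metric], reverse=True)


applications = [
    {"id": "A", "peer_score": 4.6, "training_pct": 20},
    {"id": "B", "peer_score": 4.1, "training_pct": 45},
    {"id": "C", "peer_score": 2.9, "training_pct": 60},
]
print([a["id"] for a in two_stage_rank(applications, 4.0, "training_pct")])  # → ['B', 'A']
```

Application C has the best metric but is screened out by peer review. Among the survivors, the performance measure rather than the peer score decides the order.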

Mr. Van den Berg points out that the key challenge lies in selecting performance measures that correlate with greater impact in the field.
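One way to check whether a candidate measure tracks impact is a rank correlation such as Spearman's rho. This assumes an impact measure can be observed for funded applications some years later. The sketch below computes it with the standard library only. The sample values are invented.

```python
def average_ranks(values):
    """Rank values 1..n, giving tied values the mean of the positions they share."""
    order = sorted(range(len(values)), key=lambda i: values[i])
    ranks = [0.0] * len(values)
    i = 0
    while i < len(order):
        j = i
        # Extend j over every value tied with the one at position i.
        while j + 1 < len(order) and values[order[j + 1]] == values[order[i]]:
            j += 1
        for k in range(i, j + 1):
            ranks[order[k]] = (i + j) / 2 + 1
        i = j + 1
    return ranks


def spearman(xs, ys):
    """Spearman's rho: the Pearson correlation of the two lists of ranks."""
    if len(xs) != len(ys) or len(xs) < 2:
        raise ValueError("need two equal-length lists with at least two items")
    rx, ry = average_ranks(xs), average_ranks(ys)
    n = len(rx)
    mx, my = sum(rx) / n, sum(ry) / n
    cov = sum((a - mx) * (b - my) for a, b in zip(rx, ry))
    sxx = sum((a - mx) ** 2 for a in rx)
    syy = sum((b - my) ** 2 for b in ry)
    if sxx == 0 or syy == 0:
        raise ValueError("a list with all-equal values has no defined correlation")
    return cov / (sxx * syy) ** 0.5


training_pct_at_selection = [20, 45, 30, 10]  # hypothetical measure at selection time
impact_years_later = [3, 8, 5, 4]              # hypothetical impact measure
print(spearman(training_pct_at_selection, impact_years_later))  # → 0.8
```

A correlation computed only on funded applications says nothing about the applications that were turned down. This is one reason structured experiments are also needed.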

The author stresses that the search for more effective selection methods is still uncharted territory. He emphasizes that our methods must evolve to improve decision-making, and he suggests that this evolution requires the systematic collection of data and carefully structured experiments.

To support this evolution, he suggests several approaches. One option is to prioritize, among applications whose scores are statistically comparable, those with higher research-planning scores. Another is an experimental comparison between the applications that score highest on research planning and those that score lower, to determine whether their outcomes differ in a statistically significant way.
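The first option can be sketched as follows, under an assumed definition that the source does not give. Two applications are treated as "statistically comparable" when their mean scores differ by no more than 1.96 standard errors of the difference, roughly a 95% normal-approximation threshold. Each band of comparable applications is then reordered by research-planning score. The field names, the `z` parameter, and anchoring each band on its top-ranked member are hypothetical design choices.

```python
from math import sqrt
from statistics import mean, stdev


def standard_error(scores):
    """Standard error of the mean of one application's reviewer scores (needs >= 2 scores)."""
    return stdev(scores) / sqrt(len(scores))


def comparable(a, b, z=1.96):
    """Assumed rule: mean scores differ by no more than z standard errors of the difference."""
    diff = abs(mean(a["scores"]) - mean(b["scores"]))
    se_diff = sqrt(standard_error(a["scores"]) ** 2 + standard_error(b["scores"]) ** 2)
    return diff <= z * se_diff


def prioritize(applications, z=1.96):
    """Rank by mean peer score, then reorder each band of comparable
    applications by research-planning score, highest first."""
    ranked = sorted(applications, key=lambda a: mean(a["scores"]), reverse=True)
    result, band = [], []
    for app in ranked:
        # A band is anchored on its top-ranked member; close it when the next
        # application is no longer comparable to that anchor.
        if band and not comparable(band[0], app, z):
            result.extend(sorted(band, key=lambda a: a["planning"], reverse=True))
            band = []
        band.append(app)
    result.extend(sorted(band, key=lambda a: a["planning"], reverse=True))
    return result


apps = [
    {"id": "A", "scores": [4.5, 4.7, 4.3], "planning": 3},
    {"id": "B", "scores": [4.4, 4.6, 4.2], "planning": 5},
    {"id": "C", "scores": [3.0, 3.2, 2.8], "planning": 4},
]
print([a["id"] for a in prioritize(apps)])  # → ['B', 'A', 'C']
```

A and B are too close to separate on peer scores alone, so B's stronger research plan moves it ahead. C remains last because its gap from A is well beyond the assumed threshold.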

This methodology, while time-consuming and requiring a structured approach, holds the potential to significantly enhance our understanding of a program’s long-term impact.
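The source does not name a statistical test for the experimental comparison of high and low research-planning groups. A permutation test is one assumption-light choice. It asks how often a random relabelling of the pooled outcomes produces a difference in means at least as large as the one observed. The outcome values, the function name, and the default number of permutations are hypothetical.

```python
import random
from statistics import mean


def permutation_test(group_a, group_b, n_permutations=10_000, seed=0):
    """Two-sided permutation test for a difference in mean outcome.

    Returns (observed difference in means, estimated p-value).
    """
    rng = random.Random(seed)
    observed = mean(group_a) - mean(group_b)
    pooled = list(group_a) + list(group_b)
    n_a = len(group_a)
    extreme = 0
    for _ in range(n_permutations):
        rng.shuffle(pooled)
        diff = mean(pooled[:n_a]) - mean(pooled[n_a:])
        if abs(diff) >= abs(observed):
            extreme += 1
    # Adding one to numerator and denominator keeps the estimate above zero.
    return observed, (extreme + 1) / (n_permutations + 1)


high_planning_outcomes = [10, 11, 12, 13, 14]  # hypothetical later outcomes
low_planning_outcomes = [1, 2, 3, 4, 5]
print(permutation_test(high_planning_outcomes, low_planning_outcomes, n_permutations=2000))
```

With these invented numbers the estimated p-value is small, well under 0.05. Real outcome data would take years to collect, which is part of why the approach is time-consuming.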

« This is an opportunity to improve impact »

— Bert van den Berg

His vision is forward-looking, with an emphasis on gathering insights that will, in the future, provide a clearer understanding of what strategies are most effective and which ones yield the greatest impact relative to the resources invested. Bert van den Berg’s perspective underscores the importance of continual learning and adaptation in the pursuit of optimizing selection processes.

This content was updated on 2023-11-19 at 18:42.